Recently, I needed to send concurrent requests to check the behaviour of REST APIs on a web server. As part of my exploration, I picked up 3 tools: Postman, Apache Bench (ab), and a seq + xargs + curl command combo. In this blog, I would like to share how to use each of them and my experience with them.

For our demo purposes, I have set up a simple Flask app server locally on my system using Python, so that we can send requests to it. I used the following code [Anyone who wants to use Flask to deploy a simple server can refer to this link]:

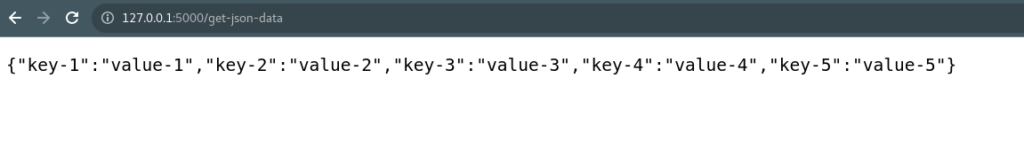

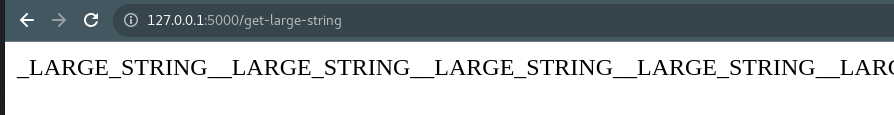

How it looks in my browser:

How it looks in my terminal:

root:bin# flask run

* Serving Flask app 'app.py'

* Debug mode: off

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on http://127.0.0.1:5000

Press CTRL+C to quit

data: {'data-1-key-1': 'value-1', 'data-1-key-2': 'value-2', 'data-1-key-3': 'value-3', 'data-1-key-4': 'value-4', 'data-1-key-5': 'value-5'}

127.0.0.1 - - [12/May/2023 18:41:37] "GET /get-json-data HTTP/1.1" 200 -

large-string: _LARGE_STRING__LARGE_STRING__LARGE_STRING__LARGE_STRING__LARGE_STRING__LARGE_STRING__LARGE_STRING__LARGE_STRING_

127.0.0.1 - - [12/May/2023 18:41:43] "GET /get-large-string HTTP/1.1" 200 -

Tools that I have explored in this blog:

a. Postman

b. Apache Bench (ab)

c. seq + xargs + curl commands

d. Other options

We will discuss each tool one by one in the sections below.

1. Postman

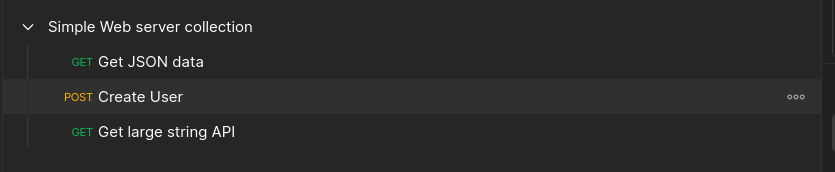

This is a popular tool among developers for testing REST APIs. Running concurrent requests within Postman requires you to put your requests into a collection. As the name suggests, you can put several APIs into a collection.

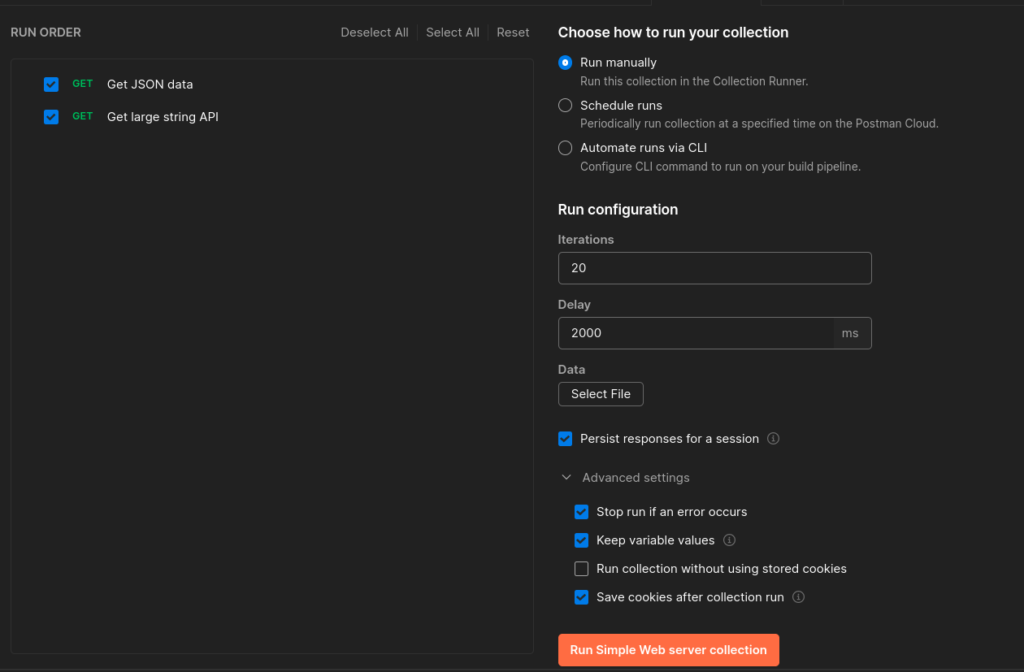

To run a particular collection, click on the 3 dots and then click “Run Collection”. Once we do that, we see the following set of options:

For demo purposes, I have selected 20 iterations with a delay of 2 seconds. I also like to see the responses, so I have selected “Persist responses for a session”. Click “Run Simple Web server collection” to trigger the runs.

To run requests concurrently, you can start several runs of the same or different collections in parallel.

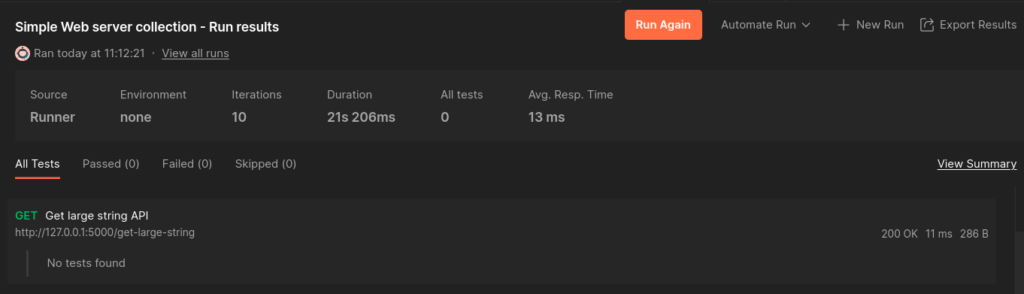

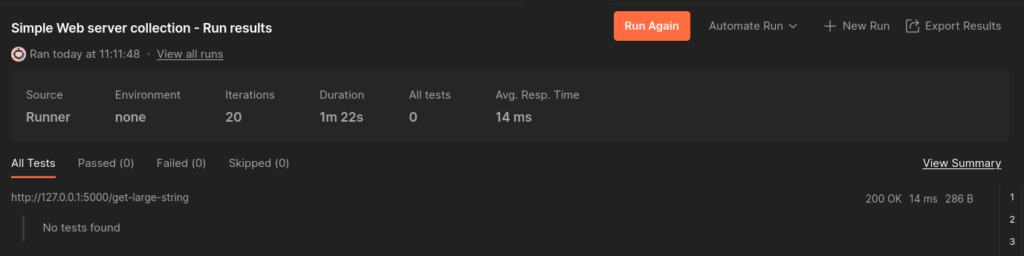

I triggered two instances and here are the respective results:

Pros: Postman offers a wealth of options for customizing your collection runs.

Cons: On the Free plan, you can only do 25 collection runs per month, and on the Basic plan, 250 collection runs per month. More about this here.

2. Apache Benchmark

ab is a tool for benchmarking your Apache Hypertext Transfer Protocol (HTTP) server. It is designed to give you an impression of how your current Apache installation performs. This especially shows you how many requests per second your Apache installation is capable of serving.

A quick glance at the ab tool CLI’s help gives us the following output:

root:~# ab -h

Usage: ab [options] [http[s]://]hostname[:port]/path

Options are:

-n requests Number of requests to perform

-c concurrency Number of multiple requests to make at a time

-t timelimit Seconds to max. to spend on benchmarking

This implies -n 50000

-s timeout Seconds to max. wait for each response

Default is 30 seconds

-b windowsize Size of TCP send/receive buffer, in bytes

-B address Address to bind to when making outgoing connections

-p postfile File containing data to POST. Remember also to set -T

-u putfile File containing data to PUT. Remember also to set -T

-T content-type Content-type header to use for POST/PUT data, eg.

'application/x-www-form-urlencoded'

Default is 'text/plain'

-v verbosity How much troubleshooting info to print

-w Print out results in HTML tables

-i Use HEAD instead of GET

-x attributes String to insert as table attributes

-y attributes String to insert as tr attributes

-z attributes String to insert as td or th attributes

-C attribute Add cookie, eg. 'Apache=1234'. (repeatable)

-H attribute Add Arbitrary header line, eg. 'Accept-Encoding: gzip'

Inserted after all normal header lines. (repeatable)

-A attribute Add Basic WWW Authentication, the attributes

are a colon separated username and password.

-P attribute Add Basic Proxy Authentication, the attributes

are a colon separated username and password.

-X proxy:port Proxyserver and port number to use

-V Print version number and exit

-k Use HTTP KeepAlive feature

-d Do not show percentiles served table.

-S Do not show confidence estimators and warnings.

-q Do not show progress when doing more than 150 requests

-l Accept variable document length (use this for dynamic pages)

-g filename Output collected data to gnuplot format file.

-e filename Output CSV file with percentages served

-r Don't exit on socket receive errors.

-m method Method name

-h Display usage information (this message)

-I Disable TLS Server Name Indication (SNI) extension

-Z ciphersuite Specify SSL/TLS cipher suite (See openssl ciphers)

-f protocol Specify SSL/TLS protocol

(SSL2, TLS1, TLS1.1, TLS1.2 or ALL)

-E certfile Specify optional client certificate chain and private key

For demo purposes, we will look at 5 options within the ab tool:

-n requests Number of requests to perform

-c concurrency Number of multiple requests to make at a time

-t timelimit Seconds to max. to spend on benchmarking

This implies -n 50000

-s timeout Seconds to max. wait for each response

Default is 30 seconds

-g filename Output collected data to gnuplot format file.

Let me try to show each option one by one:

1. If you use only the -n option, e.g:

ab -n 100 http://127.0.0.1:5000/get-json-data

This means that you want to send 100 requests to the web server sequentially.

2. If you use -c with -n option, e.g:

ab -n 100 -c 10 http://127.0.0.1:5000/get-json-data

This means that you want to send 100 requests in total, with up to 10 of them in flight at any given time.

3. If you use -n, -s and -c options together e.g:

ab -n 100 -c 10 -s 3 http://127.0.0.1:5000/get-json-data

This means that you want to send 100 requests to the web server, with up to 10 of them in flight at any given time. For each request, ab waits at most 3 seconds for a response.

4. If you use -n, -s, -c, and -t options together e.g:

ab -n 100 -c 10 -s 3 -t 5 http://127.0.0.1:5000/get-json-data

This means that you want to send requests to the web server with up to 10 of them in flight at any given time. For each request, ab waits at most 3 seconds for a response. As the help text notes, -t implies -n 50000, so ab keeps firing requests until the 5 seconds are up, even after 100 requests have completed.

5. Using the -g option, we can also write the collected per-request data to a file in gnuplot format.
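To look at the file written by -g outside of gnuplot, a small script can help. The following is a minimal sketch, assuming the file is tab-separated with the header columns starttime, seconds, ctime, dtime, ttime and wait (the layout I believe ab 2.3 writes; check the first line of your own file). The function names load_ab_gnuplot and median_total_ms are made up for this illustration.

```python
import csv
import io
import statistics


def load_ab_gnuplot(text):
    """Parse the tab-separated text written by `ab -g` into a list of dicts.

    Assumes the header row: starttime, seconds, ctime, dtime, ttime, wait.
    """
    reader = csv.DictReader(io.StringIO(text), delimiter="\t")
    rows = []
    for row in reader:
        rows.append({
            "starttime": row["starttime"],  # human-readable start time
            "ctime": int(row["ctime"]),     # connect time in ms
            "dtime": int(row["dtime"]),     # processing time in ms
            "ttime": int(row["ttime"]),     # total time in ms
            "wait": int(row["wait"]),       # waiting time in ms
        })
    return rows


def median_total_ms(rows):
    """Median of the total time per request, in milliseconds."""
    return statistics.median(r["ttime"] for r in rows)
```

For example, rows = load_ab_gnuplot(open("flask_5_seconds_requests.txt").read()) followed by median_total_ms(rows) should give a value close to the median that ab prints in its own summary.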

Finally firing our ab request:

root:~# ab -n 100 -c 10 -t 5 -s 3 -g "flask_5_seconds_requests.txt" http://127.0.0.1:5000/get-json-data

This is ApacheBench, Version 2.3 <$Revision: 1843412 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 127.0.0.1 (be patient)

Finished 1867 requests

Server Software: Werkzeug/2.3.4

Server Hostname: 127.0.0.1

Server Port: 5000

Document Path: /get-json-data

Document Length: 127 bytes

Concurrency Level: 10

Time taken for tests: 5.001 seconds

Complete requests: 1867

Failed requests: 0

Total transferred: 549541 bytes

HTML transferred: 238125 bytes

Requests per second: 373.31 [#/sec] (mean)

Time per request: 26.787 [ms] (mean)

Time per request: 2.679 [ms] (mean, across all concurrent requests)

Transfer rate: 107.31 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.4 0 6

Processing: 12 26 5.7 26 61

Waiting: 1 12 5.2 11 44

Total: 12 27 5.7 26 61

Percentage of the requests served within a certain time (ms)

50% 26

66% 28

75% 30

80% 30

90% 34

95% 37

98% 41

99% 43

100% 61 (longest request)
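The headline numbers in this report are related by simple arithmetic, which makes them easier to interpret. The sketch below recomputes them from three values in the output above (complete requests, time taken, concurrency level). The function name ab_summary is my own, and the formulas are my reading of how ab derives these lines, so treat this as an illustration rather than a specification.

```python
def ab_summary(complete_requests, time_taken_s, concurrency):
    """Recompute ab's headline metrics from three numbers in its report."""
    # Throughput: how many requests finished per second of wall-clock time.
    requests_per_second = complete_requests / time_taken_s
    # Mean time per request as experienced by one of the concurrent clients.
    time_per_request_ms = concurrency * time_taken_s * 1000.0 / complete_requests
    # Mean time per request across all concurrent clients taken together.
    time_per_request_all_ms = time_taken_s * 1000.0 / complete_requests
    return requests_per_second, time_per_request_ms, time_per_request_all_ms


# Values taken from the report above: 1867 requests, 5.001 seconds, concurrency 10.
rps, per_req, per_req_all = ab_summary(1867, 5.001, 10)
print(f"{rps:.2f} req/s, {per_req:.3f} ms, {per_req_all:.3f} ms")
```

The results agree with the report to within rounding. Small differences in the last digits are expected because ab prints the time taken rounded to three decimal places.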

To make a POST call with ab:

1. Make a file with POST body contents:

root:~# cat post_data.txt

{

"username" : "abh",

"password" : "abh"

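}

2. Fire the request like this (the help text above recommends also setting -T when posting data; without it, ab uses the default 'text/plain'):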

root:~# ab -n 100 -c 10 -s 3 -p post_data.txt http://127.0.0.1:5000/create-user

This is ApacheBench, Version 2.3 <$Revision: 1843412 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 127.0.0.1 (be patient).....done

Server Software: Werkzeug/2.3.4

Server Hostname: 127.0.0.1

Server Port: 5000

Document Path: /create-user

Document Length: 51 bytes

Concurrency Level: 10

Time taken for tests: 0.434 seconds

Complete requests: 100

Failed requests: 0

Total transferred: 22400 bytes

Total body sent: 19100

HTML transferred: 5100 bytes

Requests per second: 230.36 [#/sec] (mean)

Time per request: 43.411 [ms] (mean)

Time per request: 4.341 [ms] (mean, across all concurrent requests)

Transfer rate: 50.39 [Kbytes/sec] received

42.97 kb/s sent

93.36 kb/s total

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.5 0 3

Processing: 15 41 12.5 40 75

Waiting: 2 26 12.4 24 57

Total: 16 42 12.5 41 75

Percentage of the requests served within a certain time (ms)

50% 41

66% 45

75% 48

80% 52

90% 60

95% 68

98% 70

99% 75

100% 75 (longest request)

root:~#

Pros: It's a good choice if you want to know how many requests per second your server can handle and what percentage of requests are served within a certain time.

Cons: It can be tricky to get this tool to show the response bodies of the GET calls as well.

3. seq + xargs + curl

Another simple option for sending parallel requests is a combination of the seq, xargs and curl commands.

To send GET requests (here we are sending a total of 10 requests, with 5 requests in parallel):

root:~# seq 1 10 | xargs -Iname -P5 curl --location 'http://127.0.0.1:5000/get-json-data'

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}

{"data-1-key-1":"value-1","data-1-key-2":"value-2","data-1-key-3":"value-3","data-1-key-4":"value-4","data-1-key-5":"value-5"}
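In the pipeline above, seq produces 10 input lines, and xargs starts one curl per line while keeping at most 5 of them running at once (-P5). A rough Python equivalent is sketched below using only the standard library. The names fetch and run_batch are hypothetical helpers for this post, and the timeout maps only loosely to a per-request limit since urllib applies it to individual socket operations.

```python
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def fetch(url, timeout=3.0):
    """Send one GET request; return (status_code, elapsed_ms)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        resp.read()  # drain the body so the timing covers the full response
        status = resp.status
    return status, (time.perf_counter() - start) * 1000.0


def run_batch(task, total=10, concurrency=5):
    """Call task() `total` times with at most `concurrency` calls in flight."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(task) for _ in range(total)]
        # Collect results in submission order; an exception in a task is re-raised here.
        return [f.result() for f in futures]
```

With the demo server running, run_batch(lambda: fetch("http://127.0.0.1:5000/get-json-data"), total=10, concurrency=5) would return ten (status, elapsed_ms) pairs. Passing the task as a callable keeps the concurrency logic separate from the request itself, so the same helper works for other endpoints.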

To include headers, append them like this:

root:~# seq 1 200 | xargs -Iname -P20 curl --location 'https://<URL>' \

--header 'x-auth-token: <token>' \

--header 'Content-Type: application/json' --insecure

To send POST requests:

root:~# seq 1 10 | xargs -Iname -P5 curl --location 'http://127.0.0.1:5000/create-user' --data '{ "username": "abh", "password": "pwd"}'

{"password":"pwd","user1":"abh"}

{"password":"pwd","user2":"abh"}

...

...

{"password":"pwd","user8":"abh"}

{"password":"pwd","user9":"abh"}

{"password":"pwd","user10":"abh"}
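If you prefer to script the POST calls, the same idea can be sketched in Python with the standard library. This is only an illustration under a few assumptions: build_post, send and post_many are names I made up, and the sketch sets Content-Type to application/json explicitly, which the curl command above does not do (curl's --data defaults to a form-encoded content type).

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def build_post(url, payload, headers=None):
    """Build a POST request carrying `payload` as a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    all_headers = {"Content-Type": "application/json"}
    all_headers.update(headers or {})  # e.g. an auth token header
    return urllib.request.Request(url, data=body, headers=all_headers, method="POST")


def send(request, timeout=3.0):
    """Send one request and return the decoded response body."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def post_many(url, payload, total=10, parallel=5, sender=send):
    """Send `total` identical POSTs with at most `parallel` in flight."""
    with ThreadPoolExecutor(max_workers=parallel) as pool:
        futures = [pool.submit(sender, build_post(url, payload)) for _ in range(total)]
        return [f.result() for f in futures]
```

With the demo server running, post_many("http://127.0.0.1:5000/create-user", {"username": "abh", "password": "pwd"}) would return the ten response bodies as strings.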

Pros: Since this relies only on commands that are usually already available on Unix-like systems, it’s a quick way to send concurrent requests. You can also use curl's options to record the data to a file.

Cons: Anyone who wants finer details about each request, such as timings and percentiles, should pick up other tools.

4. Other options

One of the prominent ones out there is Apache JMeter, which I tried to explore but was not able to get the UI to load. It also seemed confusing to me, since it requires you to create test plans in the GUI first and then trigger the runs from the CLI. Other tools include httperf, Locust, etc., which I will explore if the need arises.